The Problem

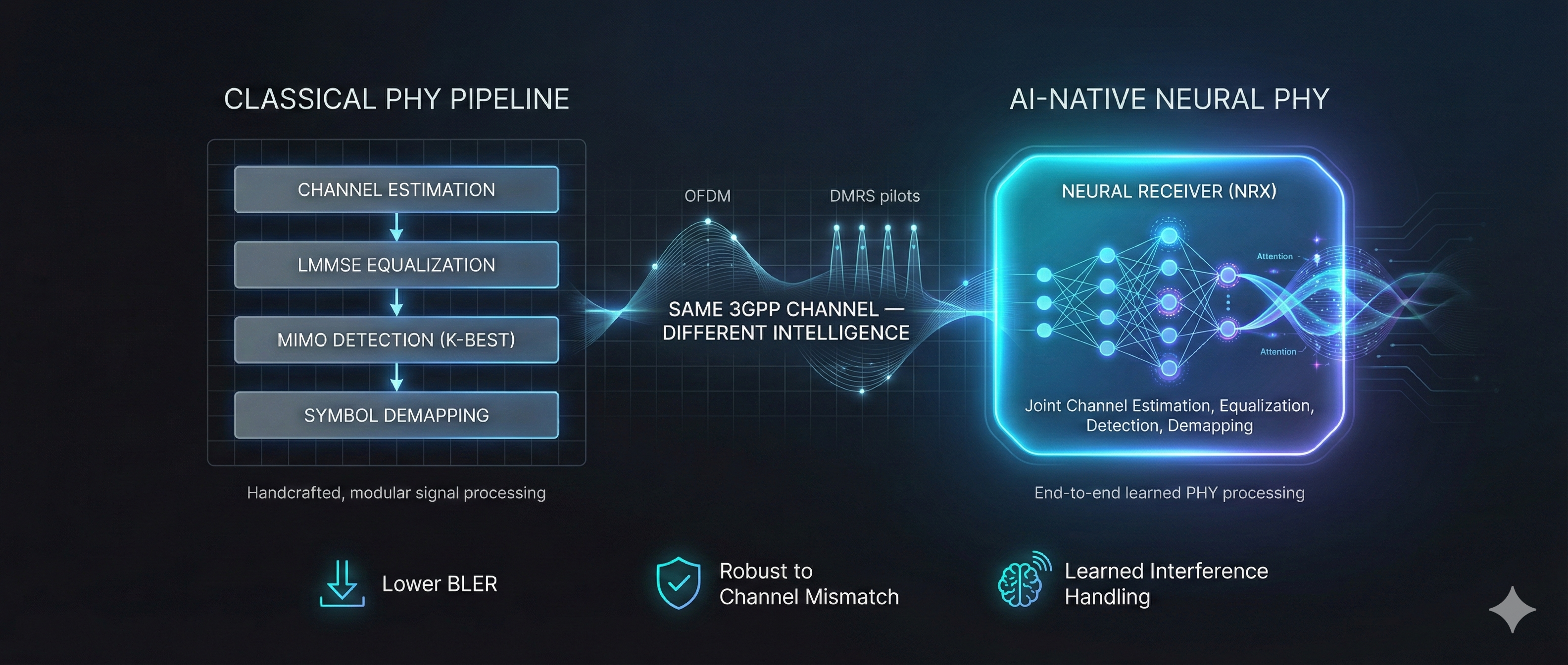

Traditional 5G receivers rely on rigid, "hand-crafted" signal-processing blocks (Channel Estimation → Equalization → Demapping → Decoding). Each block is optimized independently, so the chain as a whole can be suboptimal in complex channel environments with high interference.
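To make the block-by-block structure concrete, here is a minimal sketch of the first three stages for QPSK over a single flat-fading tap, using only the Python standard library. The channel value, pilot layout, noise level, and all function names are illustrative assumptions, and channel decoding is omitted. Each function only sees the output of the previous one, which is exactly the independence a neural receiver tries to remove.

```python
import cmath
import math
import random

# QPSK constellation with unit average energy; bit pair -> symbol (Gray mapping).
QPSK = {
    (0, 0): complex(1, 1) / math.sqrt(2),
    (0, 1): complex(1, -1) / math.sqrt(2),
    (1, 0): complex(-1, 1) / math.sqrt(2),
    (1, 1): complex(-1, -1) / math.sqrt(2),
}


def awgn(rng, sigma):
    """One complex Gaussian noise sample, std sigma per real dimension."""
    return complex(rng.gauss(0.0, sigma), rng.gauss(0.0, sigma))


def estimate_channel(rx_pilots, tx_pilots):
    """Block 1: least-squares estimate of a single complex channel tap."""
    num = sum(y * x.conjugate() for y, x in zip(rx_pilots, tx_pilots))
    den = sum(abs(x) ** 2 for x in tx_pilots)
    return num / den


def equalize(rx_symbols, h_hat):
    """Block 2: zero-forcing equalization (divide out the estimated tap)."""
    return [y / h_hat for y in rx_symbols]


def demap(symbols):
    """Block 3: hard-decision QPSK demapping from the signs of I and Q."""
    bits = []
    for s in symbols:
        bits.append(0 if s.real > 0 else 1)
        bits.append(0 if s.imag > 0 else 1)
    return bits


def run_chain(seed=0, n_pilots=16, n_data=64, sigma=0.05):
    """Send pilots and data through a hypothetical channel, then run the chain."""
    rng = random.Random(seed)
    h = cmath.rect(0.8, 1.1)  # assumed flat-fading tap: gain 0.8, phase 1.1 rad
    tx_pilots = [QPSK[(0, 0)]] * n_pilots
    tx_bits = [rng.randint(0, 1) for _ in range(2 * n_data)]
    tx_data = [QPSK[(tx_bits[i], tx_bits[i + 1])]
               for i in range(0, len(tx_bits), 2)]
    rx_pilots = [h * x + awgn(rng, sigma) for x in tx_pilots]
    rx_data = [h * x + awgn(rng, sigma) for x in tx_data]
    h_hat = estimate_channel(rx_pilots, tx_pilots)
    rx_bits = demap(equalize(rx_data, h_hat))
    errors = sum(a != b for a, b in zip(tx_bits, rx_bits))
    return h, h_hat, errors
```

Calling `run_chain()` returns the true tap, its estimate, and the number of bit errors. Any error in `estimate_channel` propagates unchanged into `equalize` and `demap`, since none of the later blocks can correct for it.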

The industry is moving toward AI-Native PHY, where the entire receiver chain is replaced by a single Neural Network (Neural Receiver) that learns to jointly optimize detection and estimation directly from data.
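One common way to train such a receiver directly from data is to have it emit one logit per transmitted bit and minimize the binary cross-entropy against the known bits. This write-up does not state which loss is used, so the sketch below is an assumption for illustration; `bce_from_logits` is a hypothetical helper, not a Sionna function.

```python
import math


def bce_from_logits(bits, logits):
    """Mean binary cross-entropy in nats; a logit > 0 favours bit 1."""
    total = 0.0
    for b, z in zip(bits, logits):
        # Numerically stable form of
        # -[b * log(sigmoid(z)) + (1 - b) * log(1 - sigmoid(z))].
        total += max(z, 0.0) - z * b + math.log1p(math.exp(-abs(z)))
    return total / len(bits)
```

Because the loss is computed on the final bit decisions, its gradient reaches every layer of the network, so estimation and detection are tuned together rather than block by block.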

Current Approach

I am leveraging NVIDIA’s Sionna framework to simulate and benchmark these Neural Receivers (NRX). The core architecture involves a GNN (Graph Neural Network) combined with CNN layers to handle Multi-User MIMO scenarios.

- Environment Setup: Configured a custom TensorFlow/Sionna environment to run 5G NR uplink simulations, overcoming hardware limitations by optimizing for CPU execution where necessary.

- Simulation: Running Monte Carlo simulations to evaluate Bit Error Rate (BER) as a function of Eb/N0 (energy per bit to noise power spectral density ratio, a normalized measure of SNR).

- Robustness Testing: Evaluating performance across TDL (Tapped Delay Line) channel models for both Single-User and Multi-User interference scenarios.
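The Monte Carlo step above can be sketched in plain Python. This is a deliberately simplified stand-in, assuming uncoded BPSK over an AWGN channel rather than the 5G NR uplink and TDL models used in the project, and it does not use Sionna. It shows how an Eb/N0 value is converted to a noise level and how the simulated BER can be checked against the closed-form BPSK result.

```python
import math
import random


def simulate_ber(ebno_db, n_bits=200_000, seed=0):
    """Monte Carlo BER estimate for uncoded BPSK over AWGN at Eb/N0 in dB."""
    rng = random.Random(seed)
    ebno = 10.0 ** (ebno_db / 10.0)
    # Unit-energy BPSK carries one bit per symbol, so Eb = 1 and N0 = 1/ebno.
    # The noise variance per real dimension is N0 / 2.
    sigma = math.sqrt(1.0 / (2.0 * ebno))
    errors = 0
    for _ in range(n_bits):
        bit = rng.getrandbits(1)
        tx = 1.0 - 2.0 * bit            # bit 0 -> +1, bit 1 -> -1
        rx = tx + rng.gauss(0.0, sigma)
        errors += (rx < 0.0) != (bit == 1)
    return errors / n_bits


def theoretical_ber(ebno_db):
    """Closed-form BPSK/AWGN bit error rate: 0.5 * erfc(sqrt(Eb/N0))."""
    ebno = 10.0 ** (ebno_db / 10.0)
    return 0.5 * math.erfc(math.sqrt(ebno))


def ber_curve(ebno_db_points):
    """Return (Eb/N0 in dB, simulated BER, theoretical BER) per point."""
    return [(p, simulate_ber(p), theoretical_ber(p)) for p in ebno_db_points]
```

For example, `ber_curve([0, 2, 4, 6])` produces the points of a BER vs. Eb/N0 plot. At low BER the number of simulated bits has to grow so that enough error events are observed for the estimate to be meaningful.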

Research Impact & Goals

This project aims to demonstrate that a jointly optimized neural receiver can outperform classical baselines, particularly in non-linear channel conditions.

By training an end-to-end autoencoder, we are moving away from mathematically modeled blocks to a system that "learns" the physics of the channel. This has the potential to:

- Significantly reduce Bit Error Rates (BER) in high-interference environments.

- Generalize better to unseen channel conditions compared to rigid classical algorithms.

- Pave the way for 6G standards where AI is native to the physical layer design.